Value lines

Digital society and public values

Technologies have a powerful transformative potential. They are not just passive, neutral tools that we use to fulfil pre-defined functions. As they are introduced, and as we interact with them, they destabilize existing values which ground and give meaning to our interactions, societal institutions, economies and political structures. iHub’s research centers on core public values which are at stake in digitalization, such as Privacy & Security, Solidarity & Justice, Freedom & Democracy, Expertise & Meaningful work. These value-lines structure our research: for each one we research how the values in question are affected by digitalization and how these values can be protected.

Research aims

The research conducted at iHub has two aims: to identify and map the beneficial and detrimental impacts of digitalization, and to provide solutions that support the former and address the latter. Roughly speaking, these can be thought of as our “theoretical” and “applied” arms. Thus, on the one hand, we engage in theoretical work to clarify how digitalization destabilizes norms, practices and laws. On the other, building on this theoretical research, we seek to develop value-driven solutions that can both help secure the desirable effects of digitalization and limit or pre-empt its undesirable ones. Such solutions can be technical (e.g. privacy by design), in which case they will be developed and tested in the hub’s iLab, but they can also be normative (e.g. updating regulations and laws) or institutional (e.g. new governance frameworks). Work in both research arms is interdisciplinary, drawing on frameworks, insights and methods provided by the pool of disciplines collaborating in the hub.

Democracy & Freedom

Smart technologies increasingly influence and “nudge” human behavior in ways that users are unaware of. In this value line we research how our freedom is affected as we go about our lives interacting with digital technologies both on- and off-line, when such influence is acceptable, and how it affects the democratic functioning of our societies. Is the notion of informed consent sufficient to ensure a meaningful sense of freedom and autonomy? If not, how can it be updated legally, ethically, and technically? Can positive conceptions of freedom be translated into a world where digital surveillance is increasingly normalized? Do filter bubbles, micro-targeting and fake news really reshape politics? Alongside questions of freedom, this value line explores the power of big tech and platforms over our democratic institutions and the public domain, and the need to achieve digital sovereignty and autonomy: how does the power to develop and design necessary infrastructures, such as platforms for news provision, primary education and public health, undermine democratic control and accountability?

Solidarity & Justice

Digitalization and datafication, for example through the deployment of AI and predictive analytics, are increasingly being used to predict who will benefit from a medical treatment, who will default on a loan payment, who the most appropriate hire is, or what educational program best fits a pupil. While this can yield many benefits, these technologies generate categories for differentiating between people, and so they also risk reinforcing existing inequalities along gender, ethnic and socio-economic lines, or creating new types of inequalities based on new data profiles. This can weaken solidarity – the sense of reciprocity and obligations of mutual aid we have as citizens – and it can undermine inclusivity and equal access, which underpin our health, education, welfare and legal systems. At iHub we research how AI and other technologies can be developed and implemented in ways that ensure solidarity and (sector-specific) ideals of justice and fairness: Where do non-discrimination law and ethical guidelines need updating? Can fairness be designed into AI, and if so, how? How do we build technologies that are optimized for increased access to public services?

Privacy, Security & Digital Autonomy

Privacy is a core value and fundamental principle of democratic societies. Yet privacy, and its correlate security, are constantly under strain in data-rich environments, such as online platforms or medical research using large datasets. This is because digital data can flow between different contexts much more easily than data did in the paper age. For example, data generated on a mobile app used to monitor physical activity, such as steps, can be sold to third parties, such as advertisers or health insurers. When data travel between contexts in this way, privacy is easily violated. This is a legal issue but also a moral one: our expectations of privacy can be violated even if the terms and conditions that we consent to stipulate that this sharing of data will take place. At iHub we explore the subjective, social, legal, ethical and technical dimensions of privacy and security: What does privacy mean for people in different contexts? How can we achieve digital sovereignty? How can privacy-by-design technologies in which European public values are embedded, such as Yivi and PubHubs developed by iHub researchers, be applied in new contexts?

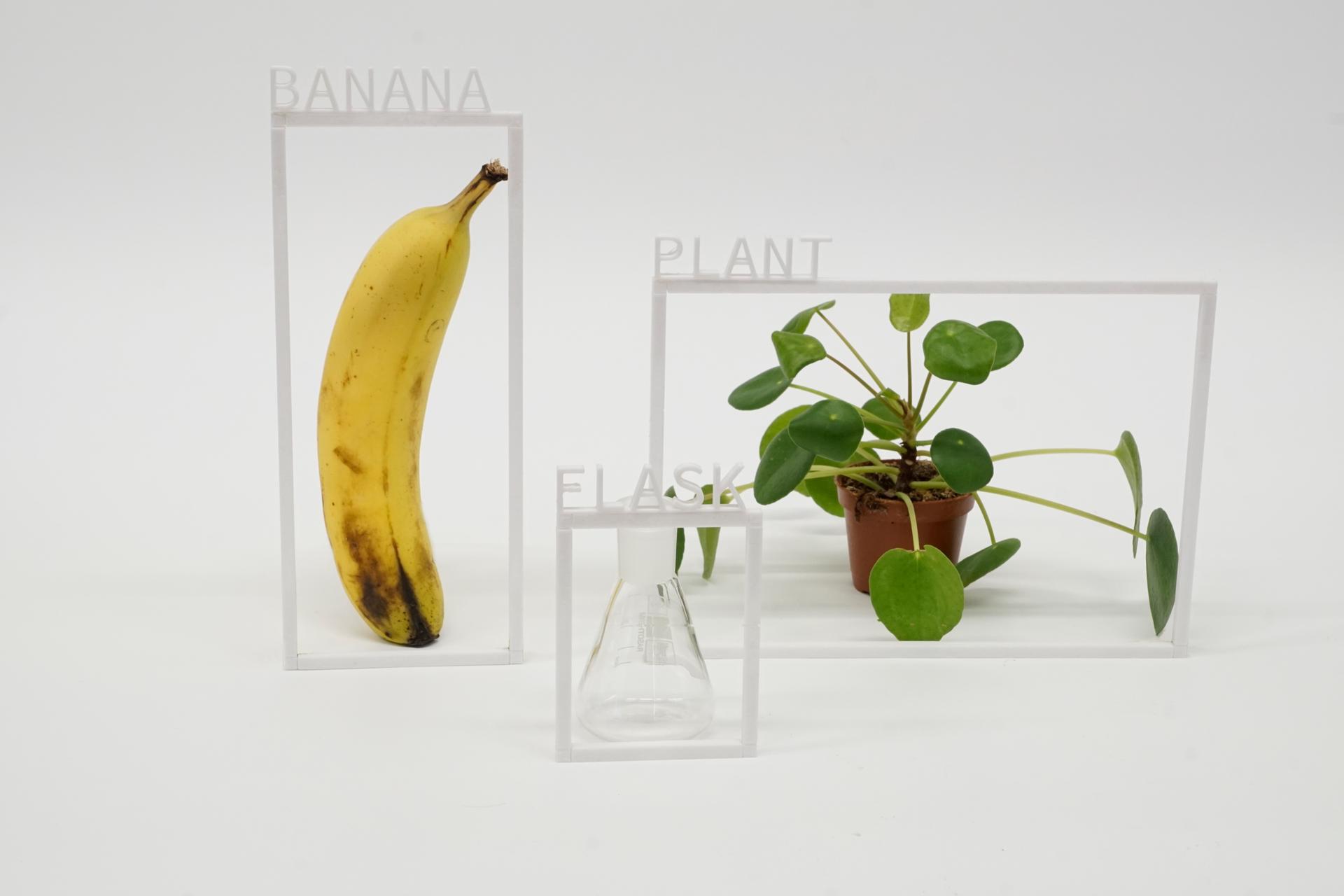

Max Gruber / Better Images of AI / Banana / Plant / Flask / CC-BY 4.0